Example 2: A Hybrid Neuroptimiser with a problem from COCO¶

This example demonstrates how to use the Neuroptimiser library to solve an optimisation problem from the COCO framework. The problem is defined by its ID and instance, and the Neuroptimiser is configured with a set of parameters for the agents.

1. Setup¶

Import necessary libraries and set up the environment for plotting.

# Import necessary libraries

import os

import shutil

from copy import deepcopy

import random

import seaborn as sns

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

from matplotlib.colors import ListedColormap

from neuroptimiser import NeurOptimiser

import cocoex as ex

# Set up the plotting style and parameters

width = 6.5

height = width * 0.618

res_dpi = 333

seed = 42

random.seed(seed)

np.random.seed(seed)

root_figures = "./figures/"

plt.style.context('paper')

sns.set_style("ticks")

plt.rcParams['font.family'] = 'serif'

plt.rcParams['axes.labelsize'] = 11

plt.rcParams['xtick.labelsize'] = 11

plt.rcParams['ytick.labelsize'] = 11

plt.rcParams['legend.fontsize'] = 11

plt.rcParams['axes.grid'] = False

plt.rcParams['axes.spines.top'] = True

plt.rcParams['axes.spines.right'] = True

plt.rcParams['axes.edgecolor'] = 'black'

plt.rcParams['xtick.color'] = 'black'

plt.rcParams['ytick.color'] = 'black'

plt.rcParams['axes.labelcolor'] = 'black'

if shutil.which("pdflatex") is not None:

plt.rcParams['text.usetex'] = True

plt.rcParams['font.serif'] = ['Computer Modern Roman']

else:

plt.rcParams['text.usetex'] = False

def save_this(fig, _in=""):

if is_saving:

os.makedirs(f"{root_figures}/" + _in, exist_ok=True)

fig.savefig(root_figures + _in + "/" + prefix_filename + ".png", transparent=True, dpi=res_dpi, bbox_inches="tight")

else:

plt.show()

2. Parameter definition¶

In this section, we define the problem parameters, including the number of steps, problem ID, instance, dimensions. In addition, we set the custom parameters for the Neuroptimiser, which will replace the default parameters of the spiking core.

# Define the problem parameters

num_steps = 500 # Number of iterations

problem_id = 15 # Problem ID from the BBOB framework

problem_ins = 1 # Problem instance

num_dimensions = 2 # Number of dimensions for the problem

num_agents = 30 # Number of agents/units in the Neuroptimiser

num_neighbours = 15 # Number of neighbours for each agent/unit

coco_suite = "bbob-noisy"

# Define the Neuroptimiser parameters

neuropt_name = "neuropt_Ex2-Hyb"

config_params = dict(

num_iterations = num_steps,

num_agents = num_agents,

spiking_core = "TwoDimSpikingCore",

num_neighbours = num_neighbours,

neuron_topology = "2dr",

unit_topology = "random",

)

# Proportion of custom spiking cores

proportion_dist = [0.8, 0.2]

neuropt_custom_params = [ # Specify the custom parameters for the spiking cores

{"name": "linear",

"coeffs": "random",

"spk_cond": "l2",

"hs_operator": "differential",

"hs_variant": "current-to-best",

"sel_mode": "greedy",

},

{"name": "izhikevich",

"coeffs": "random",

"spk_cond": "l1",

"hs_operator": "differential",

"hs_variant": "rand-to-best",

"sel_mode": "metropolis",

},

# {"name": "izhikevich",

# "coeffs": "random",

# "spk_cond": "fixed",

# "hs_operator": "differential",

# "hs_variant": "current-to-pbest",

# },

]

# Additional parameters

is_saving = True # Whether to save the figures or not

prefix_filename = (f"{neuropt_name}_"

f"{problem_id}p_"

f"{problem_ins}i_"

f"{num_dimensions}d_"

f"{num_steps}s_"

f"{num_agents}u")

plt.close()

if is_saving:

print(f"prefix_filename: '{prefix_filename}'")

prefix_filename: 'neuropt_Ex2-Hyb_15p_1i_2d_500s_30u'

Process the default parameters for the spiking core and applies any custom parameters specified in neuropt_custom_params.

# Set up the default core parameters for the Neuroptimiser

default_core_params = dict(

alpha = 1.0,

dt = 0.01,

max_steps = num_steps,

noise_std = (0.0, 0.3),

ref_mode = "pgn",

is_bounded = True,

name = "linear",

coeffs = "random",

approx = "rk4",

thr_mode = "fixed",

thr_alpha = 1.0,

thr_min = 1e-6,

thr_max = 1.0,

thr_k = 0.05,

spk_cond = "fixed",

spk_alpha = 0.25,

hs_operator = "fixed",

hs_variant = "current-to-rand",

)

# Process the custom parameters for the Neuroptimiser

if neuropt_custom_params is None:

neuropt_custom_params = [{}]

custom_params_indices = np.random.choice(a=range(len(neuropt_custom_params)), size=num_agents, p=proportion_dist)

core_params = []

for i in range(num_agents):

p = deepcopy(default_core_params)

override = neuropt_custom_params[custom_params_indices[i]]

# override = neuropt_custom_params[i % len(neuropt_custom_params)]

p.update(override)

core_params.append(p)

3. Problem setup and optimisation¶

We first retrieve the problem from the IOH framework.

# Get the problem from the COCO framework

observer = ex.Observer("bbob", "result_folder: %s_on_%s" % (neuropt_name, "bbob2009"))

suite = ex.Suite(coco_suite, "", f"dimensions:{num_dimensions} instance_indices:{problem_ins}")

problem = suite.get_problem(problem_id)

problem.observe_with(observer)

# problem.free()

print(problem)

COCO INFO: Results will be output to folder exdata/neuropt_Ex2-Hyb_on_bbob2009-0088

bbob_noisy_f116_i01_d02: a 2-dimensional single-objective problem (problem 225 of suite "b'bbob-noisy'" with name "bbob(gaussian_noise_model(BBOB-NOISY suite problem f116 instance 1 in 2D))")

Then, we instantiate the Neuroptimiser with the defined parameters and solve the problem. The debug_mode is set to True to enable detailed logging of the optimisation process.

# Instantiation

optimiser = NeurOptimiser(config_params, core_params)

# Solve the problem

optimiser.solve(problem, debug_mode=True)

[neuropt:log] Debug mode is enabled. Monitoring will be activated.

[neuropt:log] Parameters are set up.

[neuropt:log] Initial positions and topologies are set up.

[neuropt:log] Tensor contraction layer, neighbourhood manager, and high-level selection unit are created.

[neuropt:log] Population of nheuristic units is created.

[neuropt:log] Connections between nheuristic units and auxiliary processes are established.

[neuropt:log] Monitors are set up.

[neuropt:log] Starting simulation with 500 iterations...

... step: 0, best fitness: 893.1510620117188

... step: 50, best fitness: -54.93385314941406

... step: 100, best fitness: -54.93385314941406

... step: 150, best fitness: -54.93999099731445

... step: 200, best fitness: -54.93999099731445

... step: 250, best fitness: -54.93999099731445

... step: 300, best fitness: -54.939998626708984

... step: 350, best fitness: -54.939998626708984

... step: 400, best fitness: -54.939998626708984

... step: 450, best fitness: -54.939998626708984

... step: 499, best fitness: -54.939998626708984

[neuropt:log] Simulation completed. Fetching monitor data... done

(array([-1.72700867, -1.50787055]), -54.939998626708984)

# Show the overall configuration parameters of the optimiser

print(optimiser.config_params)

{'num_iterations': 500, 'num_agents': 30, 'spiking_core': 'TwoDimSpikingCore', 'num_neighbours': 15, 'neuron_topology': '2dr', 'unit_topology': 'random', 'num_dimensions': 2, 'seed': 69, 'search_space': array([[-5., 5.],

[-5., 5.]]), 'function': <function AbstractSolver._rescale_problem.<locals>.scaled_problem at 0x121327b50>, 'core_params': {}}

# Show the core parameters used in the optimisation

pd.DataFrame(optimiser.core_params)

| alpha | dt | max_steps | noise_std | ref_mode | is_bounded | name | coeffs | approx | thr_mode | ... | spk_cond | spk_alpha | hs_operator | hs_variant | sel_mode | spk_q_ord | hs_params | spk_weights | seed | init_position | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 69 | [0.21508970380287673, -0.6589517526254169] |

| 1 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | izhikevich | random | rk4 | fixed | ... | l1 | 0.25 | differential | rand-to-best | metropolis | 2 | {} | [0.5, 0.5] | 70 | [-0.869896814029441, 0.8977710745066665] |

| 2 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 71 | [0.9312640661491187, 0.6167946962329223] |

| 3 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 72 | [-0.39077246165325863, -0.8046557719872323] |

| 4 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 73 | [0.3684660530243138, -0.1196950125207974] |

| 5 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 74 | [-0.7559235303104423, -0.00964617977745963] |

| 6 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 75 | [-0.9312229577695632, 0.8186408041575641] |

| 7 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | izhikevich | random | rk4 | fixed | ... | l1 | 0.25 | differential | rand-to-best | metropolis | 2 | {} | [0.5, 0.5] | 76 | [-0.48244003679996617, 0.32504456870796394] |

| 8 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 77 | [-0.3765778478211781, 0.040136042355621626] |

| 9 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 78 | [0.0934205586865593, -0.6302910889489459] |

| 10 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 79 | [0.9391692555291171, 0.5502656467222291] |

| 11 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | izhikevich | random | rk4 | fixed | ... | l1 | 0.25 | differential | rand-to-best | metropolis | 2 | {} | [0.5, 0.5] | 80 | [0.8789978831283782, 0.7896547008552977] |

| 12 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | izhikevich | random | rk4 | fixed | ... | l1 | 0.25 | differential | rand-to-best | metropolis | 2 | {} | [0.5, 0.5] | 81 | [0.19579995762217028, 0.8437484700462337] |

| 13 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 82 | [-0.823014995896161, -0.6080342751617096] |

| 14 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 83 | [-0.9095454221789239, -0.3493393384734713] |

| 15 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 84 | [-0.22264542062103598, -0.4573019364522082] |

| 16 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 85 | [0.6574750183038587, -0.28649334661282144] |

| 17 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 86 | [-0.4381309806252385, 0.08539216631649693] |

| 18 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 87 | [-0.7181515500504747, 0.6043939615080793] |

| 19 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 88 | [-0.8508987126404584, 0.9737738732010346] |

| 20 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 89 | [0.5444895385933148, -0.6025686369316552] |

| 21 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 90 | [-0.9889557657527952, 0.6309228569096683] |

| 22 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 91 | [0.41371468769523423, 0.4580143360819746] |

| 23 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 92 | [0.5425406933718915, -0.8519106965318193] |

| 24 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 93 | [-0.2830685429114548, -0.7682618809497406] |

| 25 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 94 | [0.726206851751187, 0.24659625365511584] |

| 26 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 95 | [-0.3382039502947016, -0.8728832994279527] |

| 27 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 96 | [-0.3780353565686756, -0.3496333559465059] |

| 28 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 97 | [0.45921235667612814, 0.27511494271042625] |

| 29 | 1.0 | 0.01 | 500 | (0.0, 0.3) | pgn | True | linear | random | rk4 | fixed | ... | l2 | 0.25 | differential | current-to-best | greedy | 2 | {} | [0.5, 0.5] | 98 | [0.7744254851526531, -0.05557014967610141] |

30 rows × 24 columns

4. Results processing and visualisation¶

In this section, we process the results of the optimisation and visualise them using various plots. The results include the fitness values, agent positions, and phase portraits.

# Recover the results from the optimiser

fp = optimiser.results["fp"]

fg = optimiser.results["fg"]

positions = np.array(optimiser.results["p"])

best_position = np.array(optimiser.results["g"])

v1 = np.array(optimiser.results["v1"])

v2 = np.array(optimiser.results["v2"])

# Convert the spikes to integer type

spikes = np.array(optimiser.results["s"]).astype(int)

# Print some minimal information about the results

print(f"fg: {fg[-1][0]:.4f}")

print(f"{v1.min():.4f} <= v1 <= {v1.max():.4f}")

print(f"{v2.min():.4f} <= v2 <= {v2.max():.4f}")

fg: -54.9400

-1.1349 <= v1 <= 1.5066

-1.1349 <= v2 <= 1.5066

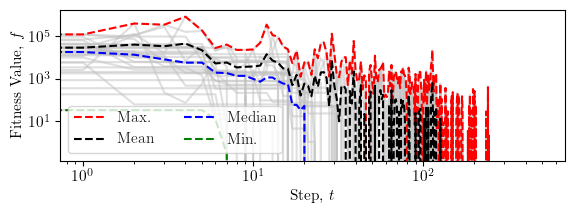

Error convergence¶

This plot shows the convergence of the absolute error in fitness values over the iterations of the optimisation process.

fig, ax = plt.subplots(figsize=(width*0.9, height/1.8))

plt.plot(fp, color="silver", alpha=0.5)

plt.plot(np.max(fp, axis=1), '--', color="red", label=r"Max.")

plt.plot(np.average(fp, axis=1), '--', color="black", label=r"Mean")

plt.plot(np.median(fp, axis=1), '--', color="blue", label=r"Median")

plt.plot(fg, '--', color="green", label=r"Min.")

plt.xlabel(r"Step, $t$")

plt.ylabel(r"Fitness Value, $f$")

lgd = plt.legend(ncol=2, loc="lower left")

plt.xscale("log")

plt.yscale("log")

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="fitness")

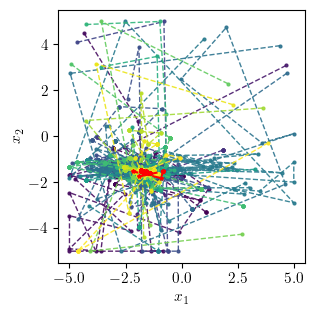

Position evolution in 2D¶

This plot shows the evolution of the unit positions in the 2D space over the iterations of the optimisation process.

fig, ax = plt.subplots(figsize=(width/2, width/2))

cmap = plt.get_cmap('viridis', num_agents)

color = cmap(np.linspace(0, 1, num_agents))

for agent, c in enumerate(color):

plt.plot(positions[:, agent, 0], positions[:,agent, 1], "--o",

color=c, alpha=0.9, markersize=2, linewidth=1,

label=f"Agent {agent}")

plt.plot(best_position[:, 0], best_position[:, 1], "--*",

color="red", markersize=3, label="Best position")

# plt.legend()

plt.xlabel(r"$x_1$")

plt.ylabel(r"$x_2$")

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="positions_2d")

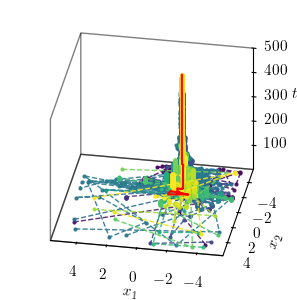

Position evolution in 3D¶

This plot shows the evolution of the unit positions in the 3D space over the iterations of the optimisation process.

fig = plt.figure(figsize=(width/2, width/2))

ax = fig.add_subplot(111, projection='3d')

ax.set_proj_type('ortho')

cmap = plt.get_cmap('viridis', num_agents)

color = cmap(np.linspace(0, 1, num_agents))

steps = np.arange(optimiser._num_iterations) + 1

for agent, c in enumerate(color):

ax.plot3D(positions[:, agent, 0], positions[:, agent, 1], steps,

"--o", color=c, alpha=0.9, markersize=2, linewidth=1,

label=f"Agent {agent}")

ax.plot3D(best_position[:, 0], best_position[:, 1], steps,

"-", color="red", markersize=2, linewidth=1.5,

label="Best position")

for axis in [ax.xaxis, ax.yaxis, ax.zaxis]:

axis.pane.set_edgecolor('black')

axis.pane.set_linewidth(1.0)

# ax.viewfig, _init(elev=35, azim=135)

ax.view_init(elev=30, azim=100)

# ax.legend()

ax.set_xlabel(r"$x_1$", labelpad=1)

ax.set_ylabel(r"$x_2$", labelpad=1)

ax.set_zlabel(r"$t$", labelpad=0)

ax.set_box_aspect([1, 1, 0.8])

ax.set_zlim(1, num_steps)

ax.grid(False)

ax.xaxis.pane.fill = False

ax.yaxis.pane.fill = False

ax.zaxis.pane.fill = False

ax.patch.set_alpha(0)

fig.subplots_adjust(left=0.1, right=0.95, top=0.95, bottom=0.1)

# fig.tight_layout()

save_this(fig, _in="positions_3d")

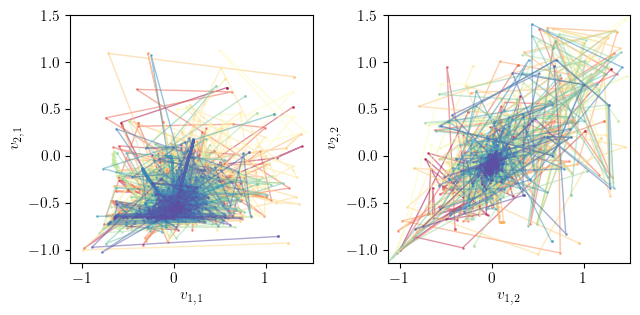

Phase portrait¶

This section visualises the phase portrait of the optimisation process in 2D. The phase portrait shows the trajectory of each agent in the 2D space over the iterations.

fig, axs = plt.subplots(nrows=np.ceil(num_dimensions / 2).astype(int),

ncols=2, figsize=(width, width/2))

steps = np.arange(optimiser._num_iterations) + 1

cmap = plt.get_cmap('Spectral', num_agents)

color = cmap(np.linspace(0, 1, num_agents))

for i, ax in enumerate(axs.flatten()):

for agent, c in enumerate(color):

ax.plot(v1[agent, :, i], v2[agent, :, i], "-o",

color=c, alpha=0.5, markersize=1, linewidth=1,

label=f"Agent {agent}")

ax.plot(v1[agent, 0, i], v2[agent, 0, i], "-s",

color=c, alpha=0.5, markersize=1, linewidth=1,

label=f"Agent {agent}")

ax.set_xlabel(r"$v_{}$".format("{1," + str(i+1) + "}"))

ax.set_ylabel(r"$v_{}$".format("{2," + str(i+1) + "}"))

ax.set_xlim(v1.min(), v1.max())

ax.set_ylim(v2.min(), v2.max())

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="portrait_2d")

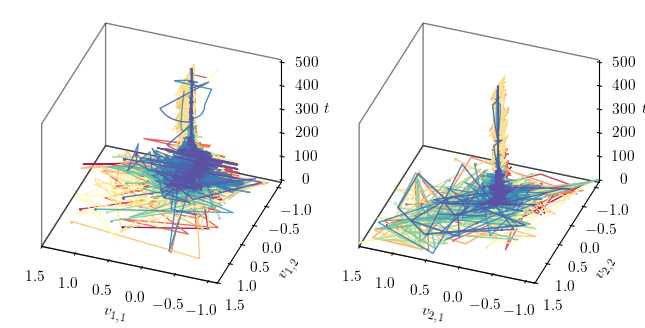

Phase portrait in 3D¶

This section visualises the phase portrait of the optimisation process in 3D. The phase portrait shows the trajectory of each agent in the 3D space over the iterations.

fig = plt.figure(figsize=(width, width/2))

num_rows = np.ceil(num_dimensions / 2).astype(int)

num_cols = 2

axs = [

fig.add_subplot(

num_rows, num_cols, i + 1, projection='3d'

) for i in range(num_dimensions)

]

steps = np.arange(optimiser._num_iterations) + 1

cmap = plt.get_cmap('Spectral', num_agents)

color = cmap(np.linspace(0, 1, num_agents))

for i, ax in enumerate(axs):

ax.set_proj_type('ortho')

ax.set_box_aspect([1, 1, 0.8])

ax.view_init(elev=35, azim=110)

for agent, c in enumerate(color):

ax.plot3D(v1[agent, :, i], v2[agent, :, i], steps, "-",

color=c, alpha=0.9,

markersize=1, linewidth=1,

label=f"Agent {agent}")

ax.plot3D(v1[agent, 0, i], v2[agent, 0, i], 0, "-s",

color=c, alpha=0.9,

markersize=1, linewidth=1,

label=f"Agent {agent}")

ax.set_xlabel(r"$v_{}$".format("{" + str(i+1) + ",1}"))

ax.set_ylabel(r"$v_{}$".format("{" + str(i+1) + ",2}"))

ax.set_zlabel(r"$t$", labelpad=0.1)

ax.set_xlim(v1.min(), v1.max())

ax.set_ylim(v2.min(), v2.max())

for axis in [ax.xaxis, ax.yaxis, ax.zaxis]:

axis.pane.set_edgecolor('black')

axis.pane.set_linewidth(1.0)

ax.grid(False)

ax.xaxis.pane.fill = False

ax.yaxis.pane.fill = False

ax.zaxis.pane.fill = False

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="portrait_3d")

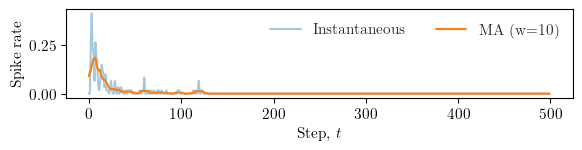

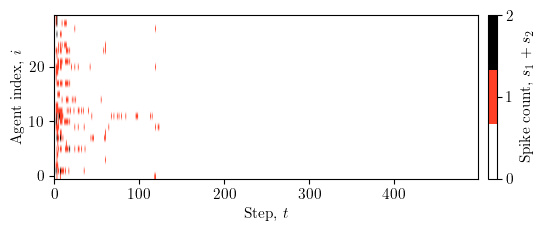

Spike activity heatmap¶

This section visualises the spike activity of the agents over the iterations of the optimisation process. The heatmap shows the summed spike counts across all dimensions for each agent at each step.

fig, ax = plt.subplots(figsize=(width*0.9, height * 0.6))

spikes_sum = np.sum(spikes, axis=2)

cmap = plt.get_cmap("CMRmap_r", 3)

im = ax.imshow(spikes_sum.T, aspect='auto', origin='lower',

cmap=cmap, vmin=0, vmax=2)

ax.set_xlabel(r"Step, $t$")

ax.set_ylabel(r"Agent index, $i$")

cbar = fig.colorbar(im, ax=ax, pad=0.02, ticks=[0, 1, 2])

cbar.set_label(r"Spike count, $s_{1}+s_{2}$")

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="spikes_hm")

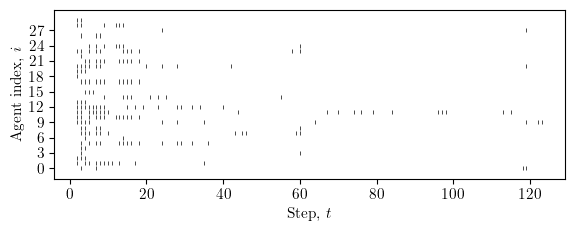

fig, ax = plt.subplots(figsize=(width*0.9, height*0.6))

# spikes: (T, A, D) -> per-agent list of spike times

events = []

for i in range(num_agents):

# any spike on any dimension at step t?

t_idx = np.where(spikes[:, i, :].any(axis=1))[0]

events.append(t_idx)

# eventplot expects a list of 1D arrays

ax.eventplot(events, orientation='horizontal', linelengths=0.8, linewidths=0.5, colors='black')

ax.set_xlabel(r"Step, $t$")

ax.set_ylabel(r"Agent index, $i$")

ax.set_ylim(-2.0, num_agents + 1.0)

ax.set_yticks(np.arange(0, num_agents, max(1, num_agents // 10)))

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="spikes_raster_agents")

window = max(5, num_steps // 50) # choose a small window

# mean activity per step (across agents and dims)

rate = spikes.mean(axis=(1, 2)).astype(float)

# simple moving average

kernel = np.ones(window) / window

rate_ma = np.convolve(rate, kernel, mode='same')

fig, ax = plt.subplots(figsize=(width*0.9, height*0.4))

ax.plot(np.arange(num_steps), rate, alpha=0.4, label="Instantaneous")

ax.plot(np.arange(num_steps), rate_ma, linewidth=1.5, label=f"MA (w={window})")

ax.set_xlabel(r"Step, $t$")

ax.set_ylabel(r"Spike rate")

ax.legend(loc="upper right", ncol=2, frameon=False)

ax.patch.set_alpha(0)

fig.tight_layout()

save_this(fig, _in="spikes_rate_ma")